The Next Outage is a journal of failure. Not because we admire collapse, but because we know it’s inevitable. Every system you rely on—whether it’s a billion-dollar cloud platform or a background job you wrote years ago — will fail someday. This blog is about those moments: what triggered them, how they unfolded, and most importantly, what we can learn before the next one arrives.

We like to imagine outages as explosions. Systems burst into flames, dashboards light up like Christmas trees, alarms scream in unison. But the truth is more subtle — and more unsettling. Outages rarely begin with a bang. They begin with a whisper.

The night began quietly. At 7:02 p.m., in a glass-walled office downtown, the last shift engineer at a Fortune 500 company grabbed his jacket and slipped out the door. The dashboard glowed green, the graphs were flat, and the pager was silent. It looked like a safe evening.

7:43 p.m. — The First Missed Lookup

A handful of customers refreshed their shopping carts, only to see an error message. Their browsers couldn’t resolve the company’s primary domain. DNS lookups were failing intermittently. The failures were scattered, uneven, almost random. Monitoring flagged a small spike in errors, but the system automatically retried, masking the issue. No one noticed.

8:15 p.m. — Cache Betrayal

DNS resolvers around the world began serving stale records. Some users still connected fine, but others were stranded outside the gates. Support tickets trickled in—just a few at first, easy to dismiss. An engineer on call logged in, saw services up, and chalked it up to “ISP issues.”

But inside the system, the time bomb was already ticking. The company’s primary DNS zone had failed to renew 24 hours earlier. A $12 oversight.

8:42 p.m. — The Outage Expands

The authentication service was the first to fall. Without working DNS, the login API couldn’t reach its token store. Users were suddenly locked out of accounts. Mobile apps froze on loading screens. Monitoring lit up, but alerts were noisy and contradictory: some regions still worked, others didn’t.

Confusion set in. Engineers pinged each other: “Are you seeing this?” “Works for me in Virginia but broken in Europe.” “Could this be a transit provider issue?”

9:07 p.m. — The Storm Breaks

By now, Twitter was on fire. Customers couldn’t log in, couldn’t check out, couldn’t even reach the homepage. The outage was global, but uneven, which made diagnosis harder. Screens in the war room flickered red. Someone finally dug deeper into the DNS records: the authoritative name servers weren’t answering at all.

Silence fell in the Slack war room. The engineers realized the unthinkable—no one had renewed the domain. The registrar had put it in a grace period.

9:31 p.m. — The Scramble

Calls were made. Credit cards fumbled. Renewals attempted. But DNS doesn’t heal instantly. TTLs, caches, recursive resolvers—millions of devices worldwide had memorized the wrong answers. Even after the domain was renewed, the poison lingered. Engineers flushed caches manually, redeployed containers, rebooted proxies, anything to force a refresh.

Meanwhile, executives were on video calls demanding answers. The CEO asked, “How long until customers are back online?” No one dared to commit to a number. DNS has no clock you can speed up.

11:52 p.m. — Systems Stagger Back

Slowly, painfully, services returned. By midnight, the site was accessible for most users. By 2:00 a.m., recovery was nearly complete. Engineers collapsed into their chairs, drained. The fix was embarrassingly simple: a missed $12 renewal. But the cost—in headlines, reputation, and lost transactions—was millions.

⸻

What They Could Have Done Differently

This outage wasn’t about complexity. It wasn’t about edge cases. It was about basic ownership and visibility. The entire incident could have been avoided if the team had:

- Put the domain under automated renewal with a corporate payment method (not an engineer’s personal card).

- Established monitoring that alerts when a domain or certificate is within 30 days of expiration.

- Assigned clear ownership for “external dependencies” like DNS, certificates, and registrars—services that don’t live in the main stack but are just as critical.

In short: the failure wasn’t technical. It was organizational.

⸻

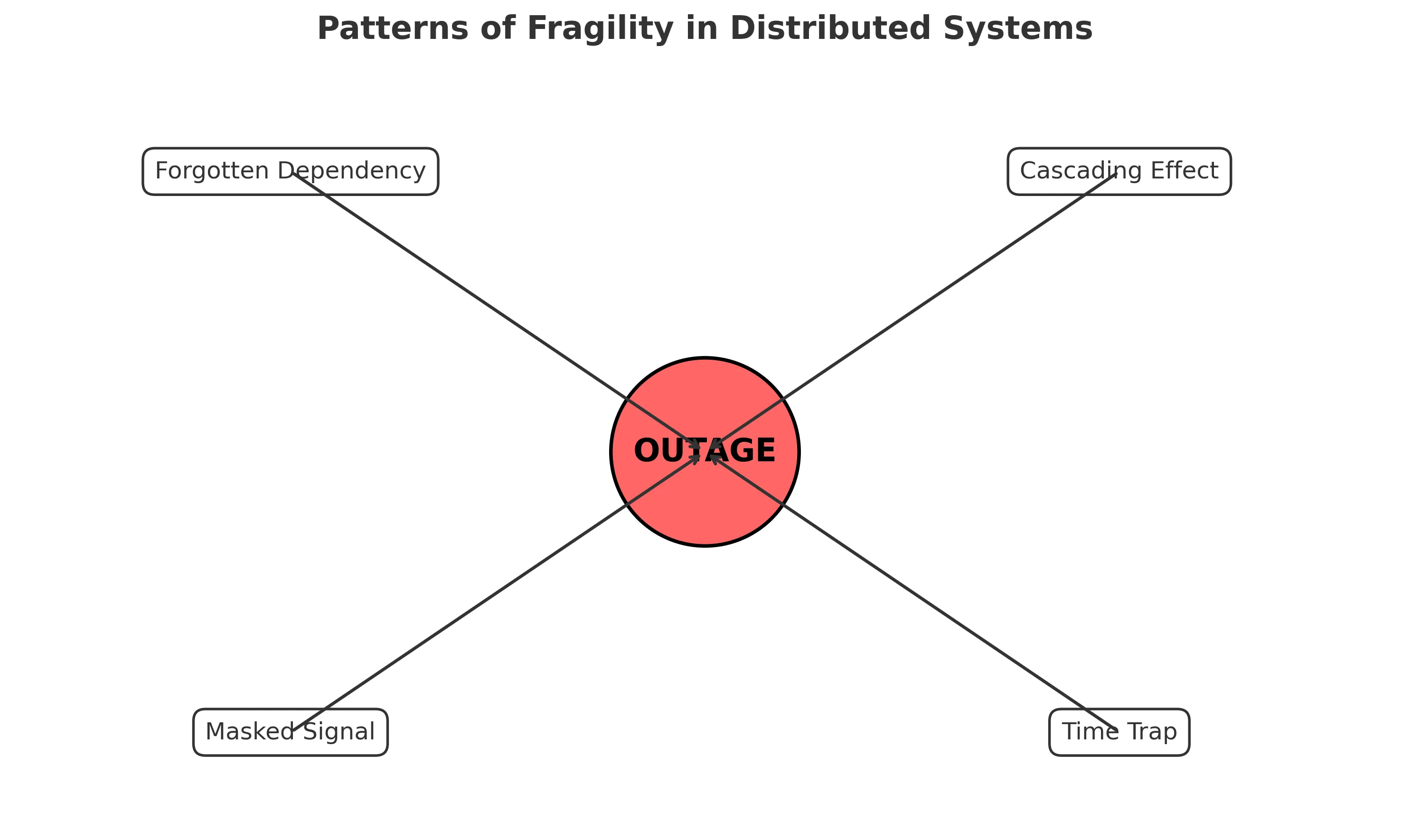

Failure Patterns to Recognize

This story follows several well-known outage patterns:

- The Forgotten Dependency: Critical components (DNS zones, TLS certs, cloud accounts) are assumed to be “someone else’s problem” until they expire.

- The Cascading Effect: One missing DNS record blocked authentication, which blocked logins, which stopped payments—multiplying the impact far beyond the root cause.

- The Masked Signal: Early lookup failures were hidden by retries and caching, delaying recognition until the problem was catastrophic.

- The Time Trap: Even after the domain was renewed, global DNS caches meant the recovery moved at the pace of the slowest resolver. Fixes don’t always apply instantly.

⸻

The Lesson

Distributed systems don’t fail because of the parts we watch. They fail because of the corners we ignore. The unglamorous, invisible components—DNS zones, certificates, cron jobs, API tokens—will betray you if they aren’t tracked with the same rigor as your core services.

Unless you address these weak points in your own environment, the next story won’t just be one you read here. It will be one you live through.